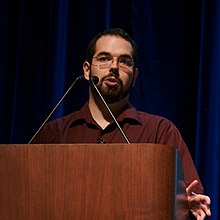

Eliezer Yudkowsky

| Eliezer Yudkowsky | |
|---|---|
| Born | September 11, 1979 |
| Nationality | American |
| Spouse | Brienne Yudkowsky (m. 2013) |

Eliezer Shlomo Yudkowsky (born September 11, 1979) is an American artificial intelligence researcher known for popularizing the idea of friendly artificial intelligence. He is a co-founder and research fellow at the Machine Intelligence Research Institute, a private research nonprofit based in Berkeley, California.

Yudkowsky's views on the safety challenges posed by future generations of AI systems are discussed in the standard undergraduate textbook in AI, Stuart Russell and Peter Norvig's Artificial Intelligence: A Modern Approach. Noting the difficulty of formally specifying general-purpose goals by hand, Russell and Norvig cite Yudkowsky's proposal that autonomous and adaptive systems be designed to learn correct behavior over time:

> Yudkowsky (2008) goes into more detail about how to design a Friendly AI. He asserts that friendliness (a desire not to harm humans) should be designed in from the start, but that the designers should recognize both that their own designs may be flawed, and that the robot will learn and evolve over time. Thus the challenge is one of mechanism design – to design a mechanism for evolving AI under a system of checks and balances, and to give the systems utility functions that will remain friendly in the face of such changes.

Citing Steve Omohundro's idea of instrumental convergence, Russell and Norvig caution that autonomous decision-making systems with poorly designed goals would have default incentives to treat humans adversarially, or as dispensable resources, unless specifically designed to counter such incentives: "even if you only want your program to play chess or prove theorems, if you give it the capability to learn and alter itself, you need safeguards".

In response to the instrumental convergence concern, Yudkowsky and other MIRI researchers have recommended that work be done to specify software agents that converge on safe default behaviors even when their goals are misspecified. The Future of Life Institute (FLI) summarizes this research program in the research priorities document accompanying its Open Letter on Artificial Intelligence:

...

Wikipedia