Binomial Distribution

|

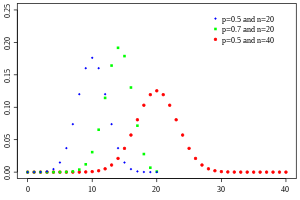

Probability mass function

|

|

|

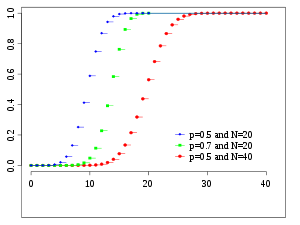

Cumulative distribution function

|

|

| Notation | B(n, p) |

|---|---|

| Parameters |

n ∈ N0 — number of trials p ∈ [0,1] — success probability in each trial |

| k ∈ { 0, …, n } — number of successes | |

| pmf | |

| CDF | |

| Mean | |

| Median | or |

| or | |

| Variance | |

| Skewness | |

| Ex. kurtosis | |

| Entropy |

in . For , use the natural log in the log. |

| MGF | |

| PGF | |

| Fisher information |

(for fixed ) |

In probability theory and statistics, the binomial distribution with parameters n and p is the discrete probability distribution of the number of successes in a sequence of n independent yes/no experiments, each of which yields success with probability p. A success/failure experiment is also called a Bernoulli experiment or Bernoulli trial; when n = 1, the binomial distribution is a Bernoulli distribution. The binomial distribution is the basis for the popular binomial test of statistical significance.
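The definition above can be illustrated by simulation. The following is a minimal sketch, not a reference implementation: the helper names `bernoulli_trial` and `binomial_sample`, the seed, and the parameter values n = 10 and p = 0.3 are all arbitrary choices made for illustration.

```python
import random


def bernoulli_trial(p, rng):
    """Return 1 (success) with probability p, otherwise 0 (failure)."""
    return 1 if rng.random() < p else 0


def binomial_sample(n, p, rng):
    """Count the successes in n independent Bernoulli trials."""
    return sum(bernoulli_trial(p, rng) for _ in range(n))


# Fixed seed (an arbitrary choice) so the run is reproducible.
rng = random.Random(0)

# Draw many binomial counts with illustrative parameters n = 10, p = 0.3.
draws = [binomial_sample(10, 0.3, rng) for _ in range(10_000)]

# The average count should land near n * p = 3.
sample_mean = sum(draws) / len(draws)
print(sample_mean)
```

Calling `binomial_sample(1, p, rng)` returns a single 0 or 1, which is the Bernoulli special case mentioned above.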

The binomial distribution is frequently used to model the number of successes in a sample of size n drawn from a population of size N. If the sampling is carried out without replacement, the draws are not independent, so the resulting distribution is a hypergeometric distribution, not a binomial one. However, for N much larger than n, the binomial distribution remains a good approximation and is widely used.
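The quality of this approximation can be checked numerically. The sketch below assumes the standard closed forms of the two mass functions and compares them at every possible count; the function names, the population sizes, and the 30% success fraction are illustrative assumptions, not values from the text.

```python
from math import comb


def binomial_pmf(k, n, p):
    """P(k successes in n independent trials, each with success probability p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def hypergeometric_pmf(k, N, K, n):
    """P(k successes in n draws without replacement from N items, K of them successes)."""
    return comb(K, k) * comb(N - K, n - k) / comb(N, n)


def max_abs_gap(N, K, n):
    """Largest pointwise difference between the two mass functions."""
    p = K / N  # success fraction in the population
    return max(
        abs(hypergeometric_pmf(k, N, K, n) - binomial_pmf(k, n, p))
        for k in range(n + 1)
    )


# Same sample size and success fraction, two very different population sizes.
small = max_abs_gap(N=50, K=15, n=10)
large = max_abs_gap(N=50_000, K=15_000, n=10)
print(small, large)
```

With the sample size held at n = 10, the gap for the larger population should come out far smaller than for the smaller one, in line with the statement above.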

In general, if the random variable X follows the binomial distribution with parameters n ∈ ℕ and p ∈ [0,1], we write X ~ B(n, p). The probability of getting exactly k successes in n trials is given by the probability mass function:

...
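As a rough sketch of how this probability can be evaluated, the snippet below assumes the standard closed form of the binomial mass function, C(n, k) p^k (1 − p)^(n − k). The function name `binomial_pmf` and the example parameters are illustrative.

```python
from math import comb


def binomial_pmf(k, n, p):
    """P(X = k) for X ~ B(n, p), assuming the standard closed form."""
    if not 0 <= k <= n:
        return 0.0  # outside the support {0, ..., n}
    return comb(n, k) * p**k * (1 - p) ** (n - k)


# Worked case: exactly 2 successes in 4 fair trials, i.e. 6 * 0.5**4.
print(binomial_pmf(2, 4, 0.5))  # 0.375

# Over the whole support the probabilities should sum to 1 (up to rounding),
# and the probability-weighted average of k should be close to n * p.
n, p = 12, 0.3
total = sum(binomial_pmf(k, n, p) for k in range(n + 1))
mean = sum(k * binomial_pmf(k, n, p) for k in range(n + 1))
print(total, mean)
```

For n = 1 the function returns 1 − p at k = 0 and p at k = 1, which matches the Bernoulli case.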

Wikipedia

![G(z)=\left[(1-p)+pz\right]^{n}.](https://wikimedia.org/api/rest_v1/media/math/render/svg/b882d226739a271dd2d513336fb42612be9f714f)